For the most part, databases have become an integral part of any organization. More importantly, they have become mission critical. On top of this, many enterprise level databases are far larger than any disk you are likely to encounter. As an example, I was required to image a database that belonged to an insurance company. This database was 68TB in total size and it was business critical. The consequence is that you need to start thinking of other ways to do forensic work on databases.

As with all live system forensics, begin with gathering the evidence required starting from the most volatile and working toward that which is unlikely to change. When doing this, remember to:

- Protect the Audit Trail - Protect the audit trail so that audit information cannot be added, changed, or deleted.

- Access only pertinent data and limit your actions — In order to avoid cluttering the meaningful information and changing the evidence; plan and target all database activities before you start.

- ERDs (entity relationship diagrams) are your friend.

Triggers and T-SQL code for analysis are rarely added into databases, but you should check. There may be something that could provide additional levels of logging and recording already on the system. The transaction logs can recreate an entire database — these are essential.

Think of other areas to look...

One form of volatile data that is usually overlooked is the "Plan Cache". When a SQL statement is submitted to be parsed by the query processor, the query processor will identify the lowest computational cost strategy to retrieve the requested data — this is an execution plan. An execution plan can be recovered from the Plan Cache and used to reconstruct the activity (such as that of an attacker). This is a source of overlooked but highly volatile evidence that can be used to recreate the execution history from stored procedures, function execution and even command line SQL queries.

ERDs

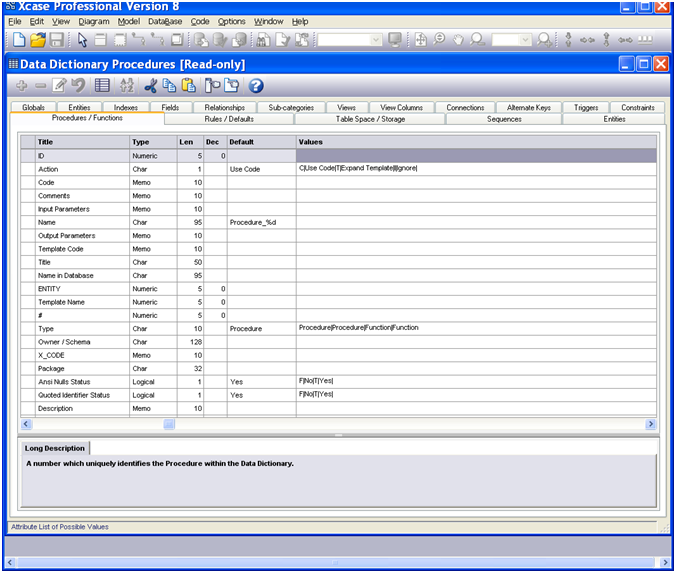

XCase and DbVisualiser are a couple great tools for database work. These map databases to create a visual map of the database and the tables. These are also known as CASE tools.

CASE tools can be a great aid to incident response and forensic work involving database systems. CASE or Computer Assisted Software Engineering tools not only help in the development of software and database structures but can be used to reverse engineer existing databases and check them against a predefined schema. There are a variety of both open source and commercial CASE tools.

With more and more commercial databases running over the terabyte size, standard command line SQL coding is unlikely to find all of the intricate relationships between these tables, stored procedures and other database functions. A CASE tool on the other hand can reverse engineer existing databases to produce diagrams that represent the database. These can be compared with existing schema diagrams to ensure that the database matches the architecture that it is originally built from and to quickly zoom in on selected areas. This can be done either from a live SQL system or a disk image.

Visual objects, colors and better diagrams may all be introduced to further enhance the capacity to analyze the structure. Reverse engineering a database will enable the determination of various structures that have been created within the database. Some of these include:

- The indexes,

- Fields,

- Relationships,

- Sub-categories,

- Views,

- Connections,

- Primary keys and alternate keys,

- Triggers,

- Constraints,

- Procedures and functions,

- Rules,

- Table space and storage details associated with the database,

- Sequences used and finally the entities within the database.

Each of the tables will also display detailed information concerning the structure of each of the fields that may be viewed at a single glance. In large databases a graphical view is probably the only method that will adequately determine if relationships between different tables and functions within a database actually meet the requirements. It may be possible in smaller databases to determine the referential integrity constraints between different fields, but in a larger database containing thousands of tables there is no way to do this in a simple manner using manual techniques.

It is not just security functions such as cross site scripting and SQL injection that need to be considered. Relationships between various entities and the rights and privileges that are associated with various tables and roles also need to be considered. The CASE tools allow us to visualize the most important security features associated with a database. These are:

- Schemas restrict the views of the database for users,

- Domains, assertions, checks and other integrity controls defined as database objects which may be enforced using the DBMS in the process of database queries and updates,

- Authorization rules. These are rules which identify the users and roles associated with the database and may be used to restrict the actions that a user can take against any of the database features such as tables or individual fields,

- Authentication schemes. These are schemes which can be used to identify users attempting to gain access to the database or individual features within the database.

- User defined procedures which may define constraints or limitations on the use of the database,

- Encryption processes. Many compliance regimes call for the encryption of selected data on the database. Most modern databases include encryption processes that can be used to ensure that the data is protected.

- Other features such as backup, check point capabilities and journaling help to ensure recovery processes for the database. These controls aid in database availability and integrity, two of the three legs of security.

CASE tools also contain other functions that are useful when conducting a forensic analysis of a database. One function that is extremely useful is model comparison.

Case tools allow the forensic analyst to:

- Present clear data models at various levels of detail using visual objects, colors and embedded diagrams to organize database schemas,

- Synchronize models with the database,

- Compare a baseline model to the actual database (or to another model),

Case tools can generate code automatically and also store this for review and baselining. This includes:

- DDL Code to build and change the database structure

- Triggers and Stored Procedures to safeguard data integrity

- Views and Queries to extract data

Model comparison involves comparing the model of the database with the actual database on the system. This can be used to ensure change control or to ensure that no unauthorized changes have been made and that the data integrity has been maintained. To do this, a baseline of the database structure will be taken at some point in time. At a later time the database could be reverse engineered to create another model and these two models could be compared. Any differences, variations or discrepancies between these would represent a change. Any changes should be authorized changes and if not, should be investigated. Many of the tools also have functions that provide detailed reports of all discrepancies.

Many modern databases run into the terabytes and contain tens of thousands of tables. A baseline and automated report of any differences, variations or discrepancies makes the job of finding a change on these databases much simpler. Triggers and stored procedures can be stored within the CASE tool itself. These can be used to safeguard data integrity. Ideally, selected areas within the database will have been set up such as honeytoken styled fields or views that can be checked against a hash at different times to ensure that no-one has altered any of these areas of the database. Further in database tables it should not change. Tables of hashes may be maintained and validated using the offline model that has stored these hash functions already. Any variation would be reported in the discrepancy report.

Next the capability to create a complex ERD or Entity Relationship Diagram in itself adds value to the engagement. Many organizations do not have a detailed structure of the database and these are grown organically over time with many of the original designers having left the organization. In this event it is not uncommon for the organization to have no idea about the various tables that they have on their own database.

Another benefit of CASE tools is their ability to migrate data. CASE tools have the ability to create detailed SQL statements and to replicate through reverse engineering the data structures. They can then migrate these data structures to a separate database that can be used for analysis offline. This is useful as the data can be copied to another system. That system may be used to interrogate tables without fear of damaging the data. In particular the data that has migrated to the tables does not need to be the actual data, meaning that the examiner does not have access to sensitive information but will know the defenses and protections associated with the database and can extract selected information without accessing all of the data.

This is useful as the examiner can then perform complex interrogations of the database that may result in damage to the database if it was running on the live system. This provides a capability for the examiner to validate the data in the database against the business rules and constraints that have been defined by the models and generate detailed integrity reports. This capability gives an organization advanced tools that will help them locate faulty data subsets and other sources of evidence through the use of automatically generated SQL statements.

Craig Wright is a Director with Information Defense in Australia. He holds both the GSE-Malware and GSE-Compliance certifications from GIAC. He is a perpetual student with numerous post graduate degrees including an LLM specializing in international commercial law and ecommerce law as well as working on his 4th IT focused Masters degree (Masters in System Development) from Charles Stuart University where he is helping to launch a Masters degree in digital forensics. He is starting his second doctorate, a PhD on the quantification of information system risk at CSU in April this year.