
I was supposed to talk about AI security controls. That lasted about ten minutes.

At AI4’s roundtable on AI adoption challenges, I had 40 leaders in the room. Capital One, Edwards Air Force Base, the IMF, state government, insurance, manufacturing, the Better Business Bureau. We went around the room rating their organizations’ AI maturity on a scale of one to five. Most landed between two and four. A few fives.

Then someone asked a question that derailed the entire agenda.

“How do you handle shadow AI when your executives are already using banned tools?”

For the next two hours, we talked about nothing else. Not adversarial attacks. Not model security. Shadow AI.

Employees using tools the security team didn’t know about, couldn’t govern, and definitely couldn’t secure.

One financial services leader captured the organizational paralysis perfectly:

“The mentality right now is we definitely don’t want to be left behind, but we definitely don’t want to be the first one to get sued.”

That’s not a strategy. That’s paralysis dressed up as caution.

But the moment that stuck with me came when I asked the executives a question: How much do you actually use ChatGPT? Because I was hearing leaders who wanted their teams to adopt AI but wouldn’t touch it themselves. I told them straight: “You’re trying to set internet strategy in the late 90s without ever having used a web browser or email.”

Then we got into personal risk. I asked how many had turned off data sharing to OpenAI. A few hands. Then I asked how many knew you need to do it on every instance. Web, phone, every device. The setting isn’t universal. Hands dropped. Half the room was checking their phones right there.

The point wasn’t to scare them. The point was this: if 40 sophisticated leaders across regulated industries don’t fully understand their own personal AI exposure, how are their organizations supposed to govern thousands of employees using these tools?

In October 2024, Software AG reported on a survey of 6,000 knowledge workers across multiple countries. Half of all employees were using unapproved AI tools. Three-quarters were already using AI somewhere at work. Nearly half, 48%, said they would not stop even if banned.

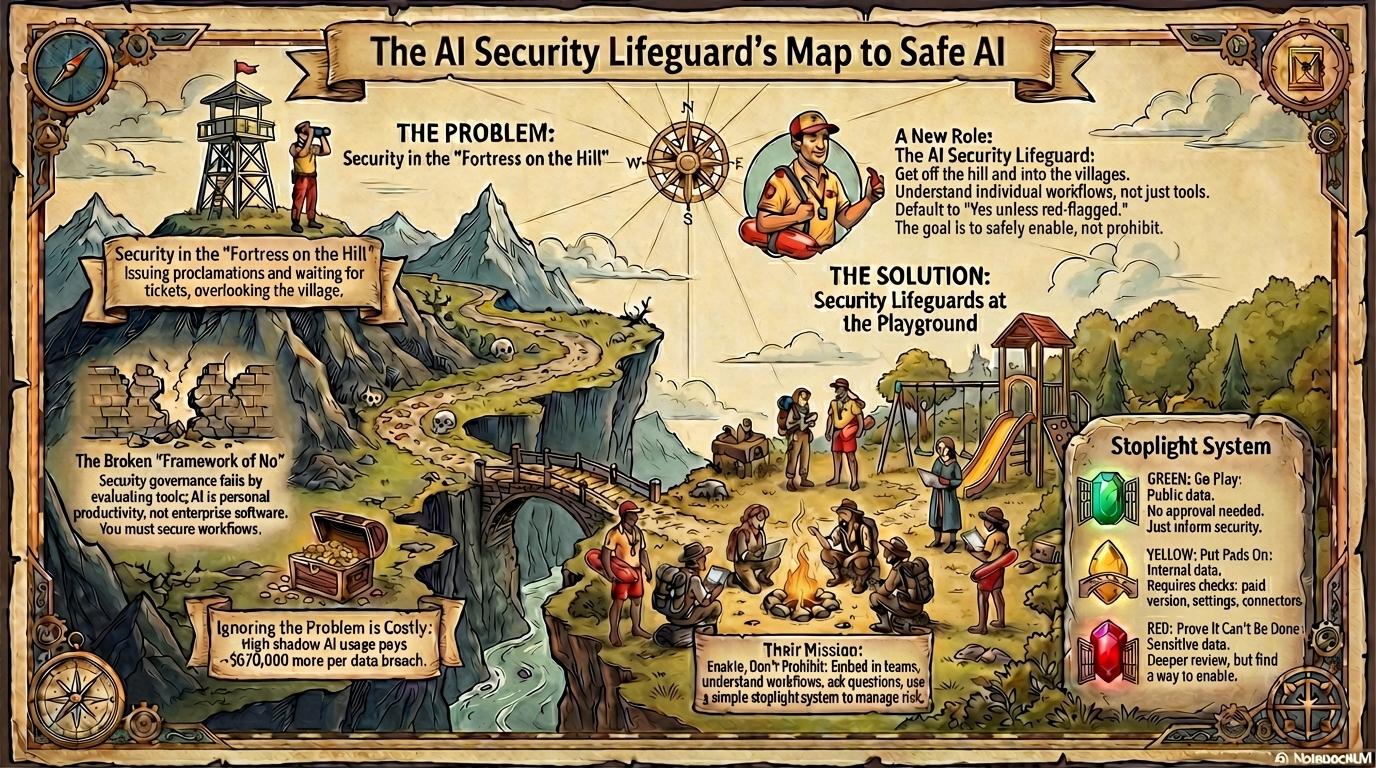

Shadow AI isn’t the edge case anymore. It’s the default. And the Framework of No (the deny-by-default policy that creates shadow AI) made it worse.

Here’s what’s quietly happening while security teams debate policy. In 2024, organizations with high shadow AI usage paid roughly $670,000 more per breach than those with low or no shadow AI, according to IBM’s 2025 Cost of a Data Breach report. Thirteen percent of organizations reported AI-related breaches. Another 8% didn’t know if they had one. And among the organizations that did report an AI-related breach, 97% lacked proper access controls for AI.

March 2024 research showed that at work, 96% of AI usage clusters around three vendors: OpenAI, Google, and Microsoft. If your proxy logs are missing those three, you’re not measuring reality. You’re measuring compliance theater.
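One way to check whether your proxy logs actually cover those three vendors is a quick count of distinct users per vendor. The sketch below is illustrative only: the hostnames in `AI_DOMAINS` are assumptions to verify against your own traffic, and the CSV layout (`user` and `host` columns) is a hypothetical export format, not any specific proxy product’s schema.

```python
import csv
import io
from collections import defaultdict

# Assumed hostnames for the three vendors; verify against your own traffic.
AI_DOMAINS = {
    "chatgpt.com": "OpenAI",
    "chat.openai.com": "OpenAI",
    "gemini.google.com": "Google",
    "copilot.microsoft.com": "Microsoft",
}

def vendor_for(host):
    """Map a hostname (or one of its subdomains) to a vendor, else None."""
    host = host.lower().strip(".")
    for domain, vendor in AI_DOMAINS.items():
        if host == domain or host.endswith("." + domain):
            return vendor
    return None

def shadow_ai_baseline(log_file):
    """Count distinct users per vendor from a CSV with 'user' and 'host' columns."""
    users = defaultdict(set)
    for row in csv.DictReader(log_file):
        vendor = vendor_for(row["host"])
        if vendor:
            users[vendor].add(row["user"])
    return {vendor: len(names) for vendor, names in users.items()}

# Hypothetical sample log; replace with a real proxy or SaaS export.
sample = io.StringIO(
    "user,host\n"
    "alice,chatgpt.com\n"
    "bob,gemini.google.com\n"
    "alice,chat.openai.com\n"
    "carol,copilot.microsoft.com\n"
    "dave,example.com\n"
    "bob,chatgpt.com\n"
)
baseline = shadow_ai_baseline(sample)
print(baseline)
```

Counting distinct users rather than raw requests keeps one heavy user from skewing the picture. If a vendor is missing from the result entirely, that is a hint the logs are not capturing that traffic.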

We’re building 18-month procurement processes for tools employees adopted in 18 minutes. Criminal organizations test AI unrestricted while our defenses require four committees and a compliance review.

What do you do instead? Bring the innovation and the risk out of the shadows.

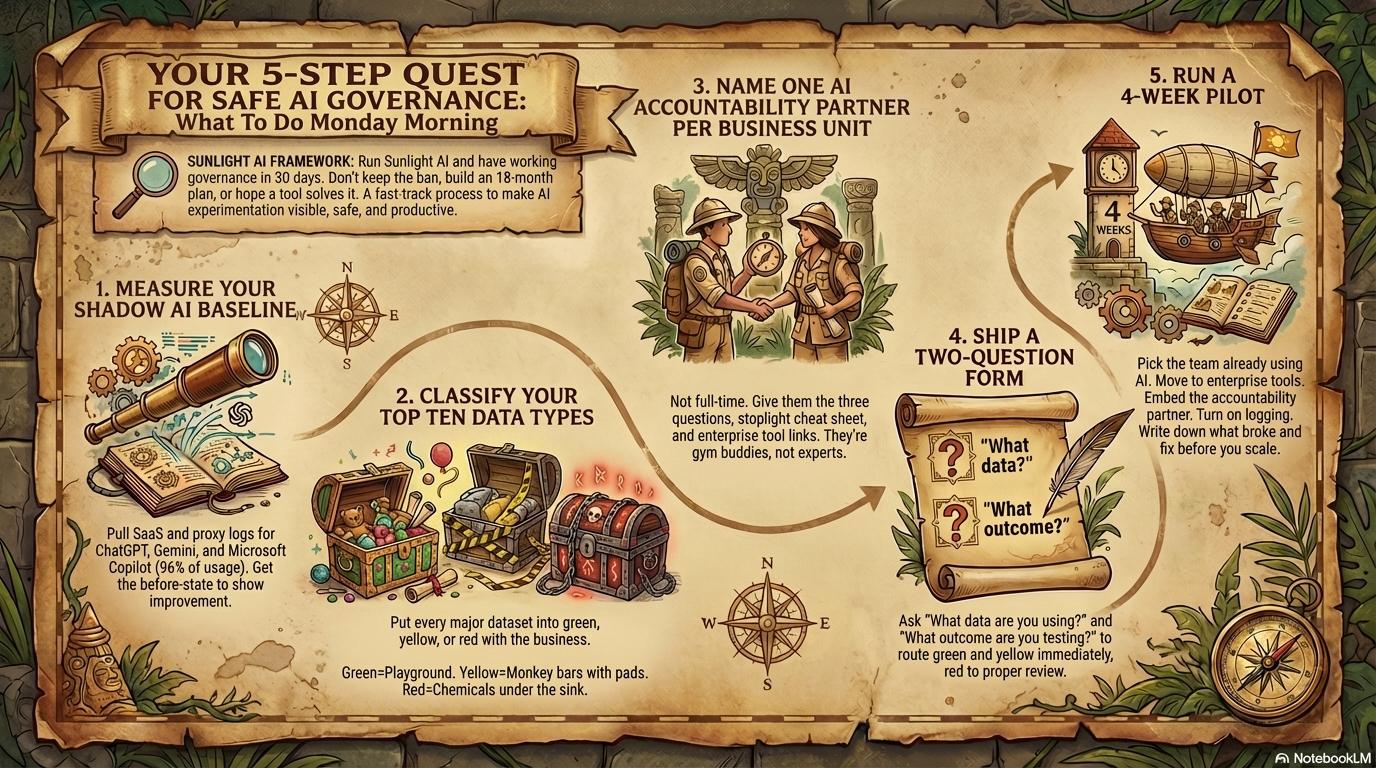

Sunlight AI is an operating system you can run Monday morning.

Here’s the problem with how security approaches AI. They’re sitting in a fortress on a hill, overlooking all the lands, hoping nothing bad happens. They evaluate tools from up there. They issue proclamations. They wait for tickets to arrive asking for permission.

But AI doesn’t work like that.

Security teams keep trying to evaluate it at the tool layer, like they’re approving Outlook. But here’s the thing about Outlook: everyone uses email differently. Some people live in their inbox. Some batch process twice a day. Some have elaborate folder systems. Some have zero. You can’t secure “how people use Outlook” by evaluating Outlook itself. You have to understand the individual workflows.

AI is the same, except worse. If I went around the room at that AI4 roundtable and asked everyone their use case, I’d get 40 different answers. Slide generation. Financial analysis. Editing kids’ photos. Code debugging. Calendar optimization. Agent-based news monitoring. Every single person uses AI differently because it’s personal productivity tooling, not enterprise software.

Security can’t govern this from the fortress on the hill. They have to venture out into the different villages.

Adopt a village. Be the Lifeguard of that village. Understand what people are actually trying to do before telling them they can’t do it.

Think about it like being a parent at a playground.

Your kid wants to go play. You have two choices:

Option one: No playground. There’s a 10% chance every single day that something bad happens. Scraped knee. Bumped head. Some other kid pushes them. Too risky. We’re staying home.

Option two: Yes, go play. I’m over here on the bench. I’m on my phone, talking to friends, listening to a podcast, enjoying the day. For the most part, nothing happens. But every now and then something goes wrong and I need to get involved. That’s fine. That’s my job.

The Framework of No is option one. No playground. Too risky.

Sunlight AI is option two. Yes, go play. I’m right here if something goes wrong.

Here’s what happens with option one. I’ve had friends who were way too helicopter about this. Carried their kids around everywhere until age three. Those kids ended up at a deficit in developmental skills compared to kids who were allowed to free roam a little bit. You could clearly tell when they were playing soccer. They weren’t used to getting bumped by another kid. They didn’t have the fine motor skills to navigate the ball around the field. Two three-year-olds on the field look exactly the same physically. One can’t do it. The other can.

The only difference is which parents saw that 10% risk and said “we’re okay with that” and let their kids develop.

Organizations that ban AI experimentation are creating the same developmental deficits in their workforce. They’ll be competing against companies whose employees have been building AI skills for two years while yours were waiting for permission.

The core principle: Reverse the default from “No until approved” to “Yes unless red-flagged.”

Like a parent at a playground: default to yes. Get involved when something actually goes wrong.

Your job is to enable, not prohibit.

The ratio I use is 60/40. Security needs to get to yes 60% of the time on routine requests. If you’re saying no more than you’re saying yes, you’re creating shadow AI, not preventing risk.
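If you want to know where you stand against that ratio, the arithmetic is simple enough to run on a ticket export. This is a minimal sketch; the decision log is made-up sample data and the `"yes"`/`"no"` labels are an assumed encoding.

```python
from collections import Counter

TARGET_YES_RATE = 0.60  # the 60/40 ratio described above

def yes_rate(decisions):
    """Return the fraction of decisions that were approvals."""
    if not decisions:
        raise ValueError("no decisions to score")
    counts = Counter(decisions)
    return counts["yes"] / len(decisions)

# Hypothetical decision log for routine AI requests.
decisions = ["yes", "no", "yes", "yes", "no", "yes", "no", "yes", "yes", "no"]
rate = yes_rate(decisions)
meets_target = rate >= TARGET_YES_RATE
print(f"yes rate: {rate:.0%}, meets 60% target: {meets_target}")
```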

You can’t let your kids have access to poisons and chemicals underneath the sink. That’s a bad idea. Move those. Put a buckle on the cabinet door. But let them roam around the kitchen and explore things. High-risk areas get locked down. Everything else gets supervised exploration.

Same principle applies here. Most organizational data isn’t regulated. Most AI experiments don’t touch customer PII or trade secrets. But security teams treat every request like it might, which creates the bottleneck that creates shadow AI.

Stoplight classification fixes this by routing based on actual risk, not theoretical risk.

Green zone: Public information. External websites. Published earnings. Marketing copy from public materials. Internal process docs without customer data or financials.

This is the equivalent of the open playground. No corporate secrets. Nothing sensitive to the company. Just straight creativity and productivity. Go free. Have fun. Learn how to write better. Learn how to analyze public data. Learn how to generate graphics from public sources.

In March 2024, only 0.5% of retail workers and 0.6% of manufacturing workers put corporate data into AI tools. Across industries, 27.4% of the data employees put into AI tools was sensitive. That means 72.6% was non-sensitive work you can green-light today. (Cyberhaven)

No questions needed. Just let security know how the tool is being used so patterns can emerge.

Yellow zone: Internal presentations without financials or PII. Strategy materials pre-signature. Draft contracts before client details. Competitive analysis using internal research. Roadmaps marked confidential.

This is like the monkey bars. Go ahead, but let me check you out real quick before you do.

At the AI4 roundtable, HR emerged as one of the fastest AI adopters across multiple organizations. One insurance company ran nine weeks of workshops with about 60 HR staff and hit 60% saturation. They started with tasks people already understood. That’s yellow-zone work: internal, not regulated, high productivity gain.

The AI Security Lifeguard in the village asks three things:

Total setup: about 15 minutes.

Red zone: HIPAA records. GDPR-covered personal data. Customer financials. Trade secrets. HR data with employee information. Anything that could result in a lawsuit or regulatory fine.

These are the chemicals under the sink. The cabinet gets a buckle.
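The three zones can be written down as a plain lookup table, which is often all a first inventory needs. The sketch below uses data types named in this article; the `Zone` enum, the `INVENTORY` table, and the `group_by_zone` helper are hypothetical names for illustration, and your own list should come out of a conversation with the business.

```python
from enum import Enum

class Zone(Enum):
    GREEN = "green"    # open playground
    YELLOW = "yellow"  # monkey bars: quick check first
    RED = "red"        # chemicals under the sink

# Example inventory built from the data types named in the article.
INVENTORY = {
    "public website content": Zone.GREEN,
    "published earnings": Zone.GREEN,
    "internal process docs (no customer data)": Zone.GREEN,
    "internal presentations (no financials or PII)": Zone.YELLOW,
    "draft contracts before client details": Zone.YELLOW,
    "confidential roadmaps": Zone.YELLOW,
    "HIPAA records": Zone.RED,
    "GDPR-covered personal data": Zone.RED,
    "customer financials": Zone.RED,
    "trade secrets": Zone.RED,
}

def group_by_zone(inventory):
    """Return a dict mapping each zone to the data types assigned to it."""
    grouped = {zone: [] for zone in Zone}
    for data_type, zone in inventory.items():
        grouped[zone].append(data_type)
    return grouped

grouped = group_by_zone(INVENTORY)
for zone in Zone:
    print(f"{zone.value}: {len(grouped[zone])} data types")
```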

One banking leader at the roundtable flagged HR data as particularly sensitive. “Some of the most sensitive internal data that you have.” Another described the core problem in financial services: “We clearly know AI is going to boost productivity... but the compliance data and privacy is the first bottleneck.”

Under GDPR Article 5(1)(c), personal data must be “adequate, relevant and limited to what is necessary.” Under the EU AI Act, training, validation, and test data for high-risk systems must meet documented quality and governance criteria. These carry penalties measured in tens of millions or percentages of global turnover.

But here’s the critical part: Even in the red zone, security still has to get to yes.

The answer can’t just be “no, too risky.” The answer has to be “here’s how we enable this safely” or “I’ve exhausted every option and it genuinely can’t be done.”

Red-zone timeline: days to weeks, not months. Set a two-to-three-week deadline for answers, not six months. If security can’t find a solution in that window, they run the Reddit test. Show me it’s a true no. Otherwise I’m assuming it’s a yes and you just haven’t done your homework.

When your security team says a red-zone request is impossible, challenge them to post anonymously on Reddit with their use case and conclusion. Why? Cybersecurity practitioners validate their thinking in real time with the security community. Reddit’s r/cybersecurity, r/netsec, and vendor-specific subs are where working professionals pressure-test assumptions daily.

Example: “I have financial data I want an AI tool to touch. I can’t figure out a way to secure it because it’s too risky. I am confident I’m right. Tell me this thing doesn’t exist.”

Post it. Wait three days. See what happens.

The Reddit community will either fully agree or tell them they’re an idiot. Either answer is useful. If the community agrees it can’t be done, it’s a no. If they offer solutions, security needs to investigate those before closing the ticket.

Here’s why this works: your security team’s default assumption is that they’ve done all the due diligence. My default assumption is they haven’t used the community resources available. Reddit is the ultimate town square. It’s a great way to punch holes in assumptions. And it forces learning, which is the actual job.

On April 10, 2025, Oxford University launched an AI Ambassador Programme. Around 70 current staff and faculty across departments, colleges, libraries, and central services took on the “AI ambassador” role as part of their day jobs. Their job: answer practical questions, help teams use university-supported AI tools safely, and feed real needs back to the central AI team. Not a central committee. Not permission slips. People in the villages, helping other villagers.

I hate the term “AI champion.” It sounds like you know what the hell you’re doing. Nobody knows what they’re doing. Not security. Not the business units. Not the executives. Everyone’s figuring this out as they go.

I like “accountability partner” better. Think gym buddy. “Hey, I’m going to show up at the gym today. I’m completely out of shape. But I’m going to be here at 8am if anyone wants to come hang out and work out with me. We’ll be here every day from here forward. We could both stumble through looking ridiculous lifting weights.”

That’s what the AI Accountability Partner’s role should be in each business unit. Not the expert. Not the approver. The person who shows up, asks the stoplight questions, and helps figure it out together.

At the AI4 roundtable, one of the most effective models came from an insurance company. They had an “AI evangelist” who sat at a desk, answered calls, had consultations, and just listened to how people did their work. Just presence and conversation. They pushed from the bottom up while the C-suite pushed from the top down. Result: 60% adoption saturation and five custom tools built internally.

GitHub’s playbook on activating internal AI champions recommends a commitment of 30 to 60 minutes weekly, framed as “choose your own adventure.” Pragmatic and sustainable.

Job description: Attend one team meeting per week. Ask stoplight questions when AI comes up. Flag red zones. Enable green and yellow experiments. If you don’t know something, say so and come back with an answer. You’re not the expert. You’re the person who shows up.

One per business unit to start. Embedded where experiments actually happen, not waiting for tickets in the fortress on the hill.

Shadow AI isn’t coming. It’s here. The question is whether you’ll manage it or pretend the ban is working.

In October 2024, half of all employees were using unapproved AI tools. Three-quarters were using AI somewhere at work. 48% said they wouldn’t stop even if banned. At organizations with high shadow AI levels, breach costs ran $670,000 higher than at those with low or no shadow AI.

The EU AI Act’s prohibitions took effect in early February 2025. High-risk obligations land in August 2026. Colorado’s AI Act becomes enforceable June 30, 2026. California AB 2013 requires developers to disclose specified training-data elements starting January 1, 2026.

OpenAI’s March 20, 2023 outage exposed payment information for approximately 1.2% of the ChatGPT Plus subscribers who were active during a nine-hour window. Samsung banned employee use after engineers pasted source code into ChatGPT, and later moved toward allowing use again under tighter internal controls and its own AI tooling. Managed experimentation doesn’t mean zero incidents. It means you see them sooner, contain them faster, and explain them to a regulator with logs instead of hope.

Goldman Sachs rolled out a firm-wide AI assistant after piloting with roughly 10,000 users. Oxford built their ambassador network. GitHub published guidance. But few organizations announce “we reversed our ban” even though that’s exactly what hundreds are doing in practice.

You have four choices:

If you pick option four:

1. Measure your shadow AI baseline

Pull SaaS and proxy logs for ChatGPT, Gemini, and Microsoft Copilot. Those three vendors account for 96% of workplace AI usage. Get the before-state so you can show improvement.

2. Classify your top ten data types

Put every major dataset into green, yellow, or red. Do this with the business, not to the business. Green is the playground. Yellow is monkey bars with pads. Red is chemicals under the sink.

3. Name one AI Accountability Partner per business unit

Not full-time. Give them the three questions, the stoplight cheat sheet, and the enterprise tool links. They’re not experts. They’re gym buddies who show up.

4. Ship a two-question form

“What data are you using?” and “What outcome are you testing?” Enough to route green and yellow immediately, red to proper review.

5. Pick the team already using AI and run a four-week pilot

Don’t invent a use case. Find the usage already in the wild. Move it to enterprise tools. Embed the accountability partner. Turn on logging. Write down what broke and fix that before you scale.
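The two-question form in step 4 can stay this small in code too. The sketch below assumes the answer to “What data are you using?” has already been mapped to a stoplight zone at intake; the `Request` class, the `route` function, and the 21-day figure (the upper end of the two-to-three-week red-zone window described earlier) are illustrative choices, not a prescribed implementation.

```python
from dataclasses import dataclass
from datetime import date, timedelta

RED_REVIEW_DAYS = 21  # assumed: upper end of the two-to-three-week window

@dataclass
class Request:
    data_used: str       # answer to "What data are you using?"
    outcome_tested: str  # answer to "What outcome are you testing?"
    zone: str            # "green", "yellow", or "red"; assumed set at intake

def route(request, submitted):
    """Return (decision, answer_due) for one form submission."""
    if request.zone == "green":
        return "proceed", submitted
    if request.zone == "yellow":
        return "proceed after quick check", submitted
    if request.zone == "red":
        return "security review", submitted + timedelta(days=RED_REVIEW_DAYS)
    raise ValueError(f"unknown zone: {request.zone!r}")

# Hypothetical submission touching red-zone data.
req = Request("customer financials", "forecast churn", "red")
decision, due = route(req, date(2026, 1, 5))
print(decision, due.isoformat())
```

Green and yellow get an answer the same day. Red gets a date, which is the point: the requester knows when to expect a yes or a proven no.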

The damned-if-you-do trap: Here’s the thing about shadow AI. It’s the result of organizations fumbling along, not sure how to use the new technology. But if it weren’t for people playing around and experimenting, there would be no use cases at all. It’s damned if you do, damned if you don’t. Sunlight AI breaks that trap by making the experimentation visible instead of forbidden.

Resourcing: Large enterprises need real programs. In our 2025 research and CISO roundtables, governance teams were staffed in the low double digits, with budgets that look like platforms, not side projects. Federal agencies requested $1.9 billion in AI R&D for FY 2024. OMB directed designation of Chief AI Officers.

Training: At the AI4 roundtable, one participant nailed the gap: “We’ve developed great educational resources, but they are optional. You can get access to Gemini but have no idea how to use prompting. I would like for us to require training if we are going to give access.” She’s right. Accountability partners need business context, working knowledge of data classification, and familiarity with the stoplight system. Plan for continuous learning.

The helicopter parent problem: Some organizations will refuse to let their people on the playground. They’ll cite the 10% risk every day and keep everyone home. Those organizations will produce employees who can’t compete. You’ll see it when they’re playing soccer against companies that allowed exploration. The developmental deficit is real.

At the end of the AI4 roundtable, I told the room something I tell every audience: “Anyone who claims they know a lot about AI is completely... I am in this. I am learning. I am constantly struggling.”

That’s not false modesty. That’s the reality everyone in that room was living. The executives who admit they’re learning can actually empower their teams to experiment. The ones who pretend they have answers while not touching the tools themselves create the paralysis that creates shadow AI.

The organizations that survive the next three years won’t be the ones with the strictest AI policies. They’ll be the ones whose policies matched reality.

Get off the hill. Go into the villages. Sit on the bench at the playground. Let people develop. Put pads on them for yellow. Lock the cabinet for red. Make security prove when something truly can’t be done.

That’s Sunlight AI. Not more permissive. More honest about where the risk actually lives.

Rob T. Lee is Chief AI Officer & Chief of Research, SANS Institute

Sources:

1 Software AG. Press release and white paper. Oct 22, 2024.

2 Rob T. Lee. Sleep. Diet. Exercise. AI. “The Framework of No: Why Your Security Team is Killing Your AI Momentum.”

3 2025 Cost of a Data Breach Report: Navigating the AI rush without sidelining security.

4 Cyberhaven Labs. “Shadow AI: how employees are leading the charge.” May 21, 2024.

5 Art. 5 GDPR. Principles relating to processing of personal data.

6 Dutch DPA. “Decision: fine Clearview AI.” Sept 3, 2024.

7 Yale New Haven Health Services Corporation Settlement.

8 HIPAA Journal. “Conduent anticipates data-breach cost.” Nov 11, 2025.

9 Oxford University. “Introducing the AI Ambassador programme.” Apr 23, 2025.

10 GitHub Resources. “Playbook series: Activating internal AI champions.” Aug 29, 2025.

11 Software AG. Press release and white paper. Oct 22, 2024.

12 IBM Security. Cost of a Data Breach Report 2025. 2025.

13 Greenberg Traurig. “Colorado delays comprehensive AI law...” Sept 5, 2025.

14 Goodwin Law. “California’s AB 2013.” Jun 2025.

15 OpenAI. “March 20 ChatGPT outage: Here’s what happened.” Mar 24, 2023.

16 TechCrunch. “Samsung bans use of generative AI tools like ChatGPT after April internal data leak.” May 2, 2023.

17 Reuters. “Goldman Sachs launches AI assistant firmwide...” Jun 23, 2025.

18 Cyberhaven Labs. “Shadow AI: how employees are leading the charge.” May 21, 2024.

19 GAO. Artificial Intelligence: Agencies Are Implementing Management Requirements. Sept 2024.

Rob T. Lee is Chief AI Officer and Chief of Research at SANS Institute, where he leads research, mentors faculty, and helps cybersecurity teams and executive leaders prepare for AI and emerging threats.
