Coming in much later than I'd hoped, this is the second installment in a series of four discussing security intelligence principles in computer network defense (CND). If you missed the introduction (parts 1 and 2), I highly recommend you read it before this article, as it sets the stage and vernacular for intelligence-driven response necessary to follow what will be discussed throughout the series. Once again, and as is often the case, the knowledge conveyed herein is that of my associates and me, learned through many man-years attending the School of Hard Knocks (TM?), and the credit belongs to all of those involved in the evolution of this material.

Just like you or me, adversaries have various computer resources at their disposal. They have favorite computers, applications, techniques, websites, etc. It is these fundamentally human tendencies and technical limitations that we exploit by collecting information on our adversaries. No person acts truly randomly, and no person has truly infinite resources at their disposal. Thus, it behooves us in CND to record, track, and group information on our sophisticated adversaries to develop profiles. With these profiles, we can draw inferences, and with those inferences, we can adapt and defend our data more effectively. After all, that's what intelligence-driven response is all about: defending data that sophisticated adversaries want. It's not about the computers. It's not about the networks. It's about the data. We have it, and they want it.

Indicators can be classified a number of ways. Over the years, my colleagues and I have wrestled with the most effective way to break them down. Currently, I am of the mind that indicators fall into one of three types: atomic, computed, and behavioral (or TTPs).

Atomic indicators are pieces of data that are indicators of adversary activity on their own. Examples include IP addresses, email addresses, a static string in a covert command-and-control (C2) channel, or fully-qualified domain names (FQDNs). Atomic indicators can be problematic, as they may or may not exclusively represent activity by an adversary. For instance, an IP address from which an attack is launched could very likely be an otherwise-legitimate site. Atomic indicators often need vetting through analysis of available historical data to determine whether they exclusively represent hostile intent.

Computed indicators are those which are, well, computed. The most common of these are hashes of malicious files, but they can also include specific data in decoded custom C2 protocols, etc. Your more complicated IDS signatures may fall into this category.

Behavioral indicators are those which combine other indicators, including other behaviors, to form a profile. Here is an example: "Bad guy 1 likes to use IP addresses in West Hackistan to relay email through East Hackistan and target our sales folks with trojaned Word documents that discuss our upcoming benefits enrollment, which drops backdoors that communicate to A.B.C.D." Here we see a combination of computed indicators (geolocation of IP addresses, MS Word attachments determined by magic number, base64 encoded in email attachments), behaviors (targets sales force), and atomic indicators (A.B.C.D C2). To borrow some parlance, these are also referred to as Tactics, Techniques, and Procedures (TTPs). Already you can probably see where we're going with intelligence-driven response: what if we can detect, or at least investigate, behavior that matches that which I describe above?
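To make the three indicator types concrete, here is a minimal Python sketch. It is an illustration only, not the tooling my team uses: the class names, the event field names (`c2_ip`, `recipient_dept`, `attachment`), and the choice of SHA-256 for the computed indicator are all assumptions made for the example.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class AtomicIndicator:
    """A piece of data that is an indicator on its own (IP, FQDN, ...)."""
    field: str   # hypothetical event field name, e.g. "c2_ip"
    value: str

    def matches(self, event: Dict[str, object]) -> bool:
        return event.get(self.field) == self.value


@dataclass(frozen=True)
class ComputedIndicator:
    """An indicator derived from data; here, a SHA-256 hash of a file."""
    sha256: str

    def matches(self, event: Dict[str, object]) -> bool:
        payload = event.get("attachment")  # hypothetical field holding raw bytes
        if not isinstance(payload, bytes):
            return False
        return hashlib.sha256(payload).hexdigest() == self.sha256


@dataclass(frozen=True)
class BehavioralIndicator:
    """A profile combining other indicators, including other behaviors."""
    name: str
    parts: Tuple[object, ...]

    def matches(self, event: Dict[str, object]) -> bool:
        # Simplification: every component must match for the profile to match.
        return all(part.matches(event) for part in self.parts)


# Made-up data loosely following the "Bad guy 1" example above.
doc = b"example trojaned document bytes"
profile = BehavioralIndicator(
    name="bad-guy-1",
    parts=(
        AtomicIndicator("c2_ip", "A.B.C.D"),
        AtomicIndicator("recipient_dept", "sales"),
        ComputedIndicator(hashlib.sha256(doc).hexdigest()),
    ),
)
event = {"c2_ip": "A.B.C.D", "recipient_dept": "sales", "attachment": doc}
print(profile.matches(event))
```

Requiring every component to match is the strictest possible rule. In practice a profile would more likely tolerate partial matches and flag them for investigation rather than treat them as a miss.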

One likes to think of indicators as conceptually straightforward, but the truth is that proper classification and storage have been elusive. I'll save the intricacies of indicator difficulties for a later discussion.

The behavioral aspect of indicators deserves its own section. Indeed, most of what we discuss in this installment centers on understanding behavior. The best way to behaviorally describe an adversary is by how he or she does his or her job. After all, this is the only discoverable part for an organization that is strictly CND (some of our friends in the USG likely have better ways of understanding adversaries). That "job" is compromising data, and therefore we describe our attackers in terms of the anatomy of their attacks.

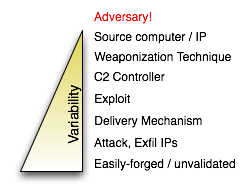

Ideally, if we could attach a human being to each and every observed activity on our network and hosts, we could easily identify our attackers, and respond appropriately every time. At this point in history, that sort of capability passes beyond "pipe dream" into "ludicrous." However mad this goal is, it provides a target for our analysis: we need to push our detection "closer" to the adversary. If all we know is the forged email address an adversary tends to use in delivering hostile email, assuming this is uniquely linked to malicious behavior, we have a mutable and temporal indicator upon which to detect. Sure, we can easily discover when it's used in the future, and we are obliged to do so as part of our due diligence. The problem is this can be changed at any time, on a whim. If, however, the adversary has found an open mail relay that no one else uses, then we have found an indicator "closer" to the adversary. It's much more difficult (though, in the scheme of things, still somewhat easy) to find a new open mail relay to use than it is to change the forged sending address. Thus, we have pushed our detection "closer" to the adversary. Atomic, computed, and behavioral indicators can describe more or less mutable/temporal indicators in a hierarchy. We as analysts seek the most static of all indicators, at the top of this list, but often must settle for indicators further from the adversary until those key elements reveal themselves. The accompanying figure shows some common indicators of an attack, and where we've seen them fall in terms of proximity to the adversary, variability, and inversely mutability and temporality.

That this analysis begins with the adversary and then dovetails into defense makes it very much a security intelligence technique as we've defined the term. Following a sophisticated actor over time is analogous to watching someone's shadow. Many factors influence what you see, such as the time of day, angle of sun, etc. After you account for these variables, you begin to notice nuances in how the person moves, observations that make the shadow distinct from others. Eventually, you know so much about how the person moves that you can pick them out of a crowd of shadows. However, you never know for sure if you're looking at the same person. At that point, for our purposes, it doesn't matter. If it looks like a duck, and sounds like a duck... it hacks like a duck. Whether the same person (or even group) is truly at the other end of behavior every time is immaterial if the profile you build facilitates predicting future activity and detecting it.

We have found that the phases of an attack can be described by 6 sequential stages. Once again loosely borrowing vernacular, the phases of an operation can be described as a "cyber kill chain." The importance here is not that this is a linear flow - some phases may occur in parallel, and the order of earlier phases can be interchanged - but rather how far along an adversary has progressed in his or her attack, the corresponding damage, and investigation that must be performed.

The reconnaissance phase is straightforward. However, in security intelligence, this often manifests not in portscans, system enumeration, or the like. It is the data equivalent: browsing websites, pulling down PDFs, learning the internal structure of the target organization. A few years ago I never would've believed that people went to this level of effort to target an organization, but after witnessing it happen, I can say with confidence that they do. The problem with activity in this phase is that it is often indistinguishable from normal activity. There are precious few cases where one can collect information here and find associated behavior in the delivery phase matching an adversary's behavioral profile with high confidence and a low false positive rate. These cases are truly gems: when they can be identified, they link what are often two normal-looking events in a way that greatly enhances detection.

The weaponization phase may or may not happen after reconnaissance; it is placed here merely for convenience. This is the one phase that the victim doesn't see happen, but can very much detect. Weaponization is the act of placing a malicious payload into a delivery vehicle. It's the difference in how a Soviet warhead is wired to the detonator versus how a US warhead is wired in. For us, it is the technique used to obfuscate shellcode, the way an executable is packed into a trojaned document, etc. Detection of this is not always possible, nor is it always predictable, but when it can be done it is a highly effective technique. Only by reverse engineering of delivered payloads is an understanding of an adversary's weaponization achieved. This is distinctly separate and often persistent across the subsequent stages.

Delivery is rather straightforward. Whether it is an HTTP request containing SQL injection code or an email with a hyperlink to a compromised website, this is the critical phase where the payload is delivered to its target. I heard a term just the other day that I really like: "warheads on foreheads" (courtesy US Army).

The compromise phase will possibly have elements of a software vulnerability, a human vulnerability aka "social engineering," or a hardware vulnerability. While the latter are quite rare by comparison, I include hardware vulnerabilities for the sake of completeness.

The compromise of the target may itself be multi-phase, or more straightforward. As a result, we sometimes have the tendency to pull apart this phase into separate sub-phases, or peel out "Compromise" and "Exploit" as wholly separate. For simplicity's sake, we'll keep this as a single phase. A single-phase exploit results in the compromised host behaving according to the attacker's wishes directly as a result of the successful execution of the delivered payload. An example is an attacker coaxing a user into running an EXE email attachment that contains the desired backdoor code. A multi-phase exploit typically will involve delivery of shellcode whose sole function is to pull down and execute more capable code upon execution. Shellcode often needs to be portable for a variety of reasons, necessitating such an approach. We have seen other cases where, possibly through sheer laziness, adversaries end up delivering exploits whose downloaders download other downloaders before finally installing the desired code. As you can imagine, the more phases involved, the lower an adversary's probability for success.
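The last point is simple arithmetic, sketched below. The sketch assumes each stage of a multi-phase exploit succeeds independently, and the 0.9 per-stage figure is invented purely for illustration; neither is a measured value.

```python
from math import prod
from typing import Iterable


def chain_success_probability(stage_probabilities: Iterable[float]) -> float:
    """Probability that every stage succeeds, assuming independent stages."""
    return prod(stage_probabilities)


# Illustrative numbers only: each stage is assumed to work 90% of the time.
single_phase = chain_success_probability([0.9])
three_phase = chain_success_probability([0.9, 0.9, 0.9])
print(single_phase, three_phase)
```

Under these assumptions the three-stage chain succeeds roughly 73% of the time against 90% for the single stage, so every extra downloader is another chance for the attack to fail or to be detected.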

This is the pivotal phase of the attack. If this phase completes successfully, what we as security analysts have classically called "incident response" is initiated: code is present on a machine that should not be there. However, as will be discussed later, the notion of "incident response" is so different in intelligence-driven response (and the classic model so inapplicable) that we have started to move away from using the term altogether. The better term for security intelligence is "compromise response," as it removes ambiguity from the term "incident."

The command-and-control phase of the attack represents the period after which adversaries leverage the exploit of a system. A compromise does not necessarily mean C2, just as C2 doesn't necessarily mean exfiltration. In fact, we will discuss how this can be exploited in CND, but recognize that successful communications back to the adversary often must be made before any potential for impact to data can be realized. This can be prevented intentionally by identifying C2 in unsuccessful past attacks by the same adversary resulting in network mitigations, or fortuitously when adversaries drop malware that is somehow incompatible with your network infrastructure, to give but two examples.

In addition to the phone call going through, someone has to be present at the other end to receive it. Your adversaries take time off, too... but not all of them. In fact, a few groups have been observed to be so responsive that it suggests a mature organization with shifts and procedures behind the attack more refined than that of many incident response organizations.

We will also lump lateral movement with compromised credentials, file system enumeration, and additional tool dropping by adversaries broadly into this phase of the attack. While an argument can be made that situational awareness of the compromised environment is technically "exfiltration," the intention of the next phase is somewhat different.

The exfiltration phase is conceptually very simple: this is when the data, which has been the ultimate target all along, is taken. Previously I mentioned that gathering information about the environment of the compromised machine doesn't fall into the exfiltration phase. The reason for this is that such data is being gathered to serve but one purpose, either immediately or longer-term: facilitate gathering of sensitive information. The source code for the new O/S. The new widget that cost billions to develop. Access to the credit cards, or PII.
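The six phases described above can be sketched as an ordered enumeration, which makes "how far along has the adversary progressed" a one-line question. The names `KillChainPhase` and `furthest_phase` are hypothetical, and treating the phases as strictly ordered is a simplification, since some phases may occur in parallel or out of order.

```python
from enum import IntEnum
from typing import Iterable, Optional


class KillChainPhase(IntEnum):
    """The six phases, numbered in the order they are presented here."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    COMPROMISE = 4
    COMMAND_AND_CONTROL = 5
    EXFILTRATION = 6


def furthest_phase(observed: Iterable[KillChainPhase]) -> Optional[KillChainPhase]:
    """Return the latest phase for which evidence was observed, if any."""
    return max(observed, default=None)


# Made-up observations for a single attack.
seen = [KillChainPhase.DELIVERY, KillChainPhase.RECONNAISSANCE]
progress = furthest_phase(seen)
print(progress.name)
# Anything at or past COMPROMISE means code is on a machine where it should not be.
print(progress >= KillChainPhase.COMPROMISE)
```

Using `IntEnum` rather than plain `Enum` is what allows the `max` and `>=` comparisons; the numeric values carry no meaning beyond ordering.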

As we analyze attacks, we begin to see that different indicators map to the phases above. While an adversary may attempt to use the exploit du jour to compromise target systems, the backdoor (C2) may be the same as past attacks by the same actor. Different proxy IP addresses may be used to relay an attack, but the weaponization may not change between them. These immutable, or infrequently-changing properties of attacks by an adversary make up his/her/their behavioral profile as we discussed in moving detection closer to the adversary. It's capturing, knowing, and detecting this modus operandi that facilitates our discovery of other attacks by the same adversary, even if many other aspects of the attack change.
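One way to picture this is to record each attack as a set of (phase, indicator) pairs and look at what two attacks have in common. The sketch below is a hypothetical illustration; the phase labels, indicator values, and the function name `shared_by_phase` are all made up for the example.

```python
from typing import Dict, Set, Tuple

Indicator = Tuple[str, str]  # (phase label, indicator value)


def shared_by_phase(attack_a: Set[Indicator],
                    attack_b: Set[Indicator]) -> Dict[str, Set[str]]:
    """Group the indicators common to both attacks by phase."""
    shared: Dict[str, Set[str]] = {}
    for phase, value in attack_a & attack_b:
        shared.setdefault(phase, set()).add(value)
    return shared


# Invented data: the proxy and exploit changed, the backdoor did not.
past_attack = {
    ("delivery", "proxy-1"),
    ("compromise", "exploit-old"),
    ("c2", "backdoor-x"),
}
new_attack = {
    ("delivery", "proxy-2"),
    ("compromise", "exploit-du-jour"),
    ("c2", "backdoor-x"),
}
print(shared_by_phase(past_attack, new_attack))
```

Here only the C2 backdoor survives the comparison, which is exactly the kind of infrequently-changing property that belongs in a behavioral profile.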

This need for the accumulation of indicators for detection means that analysis of unsuccessful attacks is important, to the extent that the attack is believed to be related to an APT adversary. A detection of malware in email by perimeter anti-virus, for instance, is only the beginning when the weaponization is one commonly used by a persistent adversary. The backdoor that would have been dropped may contain a new C2 location, or even a whole new backdoor altogether. Learning this detail, and adjusting sensors accordingly, can permit future detection when that tool or infrastructure is reused, even if detection at the attack phase fails. Discovery of new indicators also means historical searches may reveal past undetected attacks, possibly more successful than the latest one.
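The historical search mentioned at the end of that paragraph can be as simple as replaying stored events against newly learned indicators. This is a minimal sketch under stated assumptions: events are dictionaries, the field names (`date`, `dst`) and values are invented, and a real deployment would query a log store rather than a Python list.

```python
from typing import Dict, Iterable, List


def historical_hits(events: Iterable[Dict[str, str]],
                    field: str,
                    new_indicator_values: Iterable[str]) -> List[Dict[str, str]]:
    """Return past events whose `field` matches any newly learned indicator."""
    values = set(new_indicator_values)
    return [event for event in events if event.get(field) in values]


# Invented log records; "dst" is a hypothetical destination field.
old_events = [
    {"date": "2009-03-02", "dst": "benign.example"},
    {"date": "2009-05-17", "dst": "c2.example"},
    {"date": "2009-08-30", "dst": "other.example"},
]

# C2 location learned by analyzing the backdoor from a blocked email.
hits = historical_hits(old_events, "dst", ["c2.example"])
print(hits)
```

A hit in old data, like the May record here, points at an attack that went undetected at the time and may have been more successful than the one that was just blocked.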

Analysis of attacks quickly becomes complicated, and will be further explored in future entries culminating with a new model for incident response.

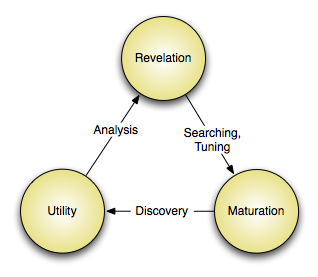

As a derivative (literary, not mathematical) of the analysis of attack progression, we have the indicator lifecycle. The indicator lifecycle is cyclical, with the discovery of known indicators begetting the revelation of new ones. This lifecycle further emphasizes why the analysis of attacks that never progress past the compromise phase is important.

The revelation of indicators comes from many places - internal investigations, intelligence passed on by partners, etc. This represents the moment that an indicator is revealed to be significant and related to a known-hostile actor.

Next comes the point where the correct way to leverage the indicator is identified. Sensors are updated, signatures written, detection tools put in the correct place, development of a new tool makes observation of the indicator possible, etc.

Last is the point at which the indicator's potential is realized: when hostile activity at some point of the cyber kill chain is detected thanks to knowledge of the indicator and correct tuning of detection devices, or data mining/trend analysis revealing a behavioral indicator, for example. And of course, this detection and the subsequent analysis likely reveal more indicators. Lather, rinse, repeat.
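The cycle just described can be sketched as a small state machine. The state names `REVEALED`, `MATURE`, and `UTILIZED` are labels chosen for this example rather than established terminology, and the functions `advance` and `on_detection` are likewise hypothetical.

```python
from enum import Enum
from typing import Dict, Iterable


class IndicatorState(Enum):
    REVEALED = "revealed"   # known to be significant and tied to a hostile actor
    MATURE = "mature"       # sensors and signatures updated to look for it
    UTILIZED = "utilized"   # it has led to detection of hostile activity


_NEXT = {
    IndicatorState.REVEALED: IndicatorState.MATURE,
    IndicatorState.MATURE: IndicatorState.UTILIZED,
}


def advance(state: IndicatorState) -> IndicatorState:
    """Move an indicator one step forward in its lifecycle."""
    if state not in _NEXT:
        raise ValueError(f"{state.name} has no later state")
    return _NEXT[state]


def on_detection(new_values: Iterable[str]) -> Dict[str, IndicatorState]:
    """Analysis of a detection reveals new indicators, restarting the cycle."""
    return {value: IndicatorState.REVEALED for value in new_values}


state = advance(advance(IndicatorState.REVEALED))
print(state.name)
# Made-up indicator learned while analyzing the detected activity.
print(on_detection(["new-c2.example"]))
```

The cycle closes through `on_detection`: the indicator that was used does not itself go back to the start, but the analysis it triggers feeds fresh indicators into the first state.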

In the next section, I will walk through a few examples and illustrate how following the attack progression forward and backward leads to a complete picture of the attack, as well as how attacks can be represented graphically. Following that will be our new model of network defense which brings all of these ideas together. You can expect amplifying entries thereafter to further enhance detection using security intelligence principles, starting with user modeling.

Michael is a senior member of Lockheed Martin's Computer Incident Response Team. He has lectured for various audiences including SANS, IEEE, and the annual DC3 CyberCrime Convention, and teaches an introductory class on cryptography. His current work consists of security intelligence analysis and development of new tools and techniques for incident response. Michael holds a BS in computer engineering, has earned GCIA (#592) and GCFA (#711) gold certifications alongside various others, and is a professional member of ACM and IEEE.